What is Edge Computing?

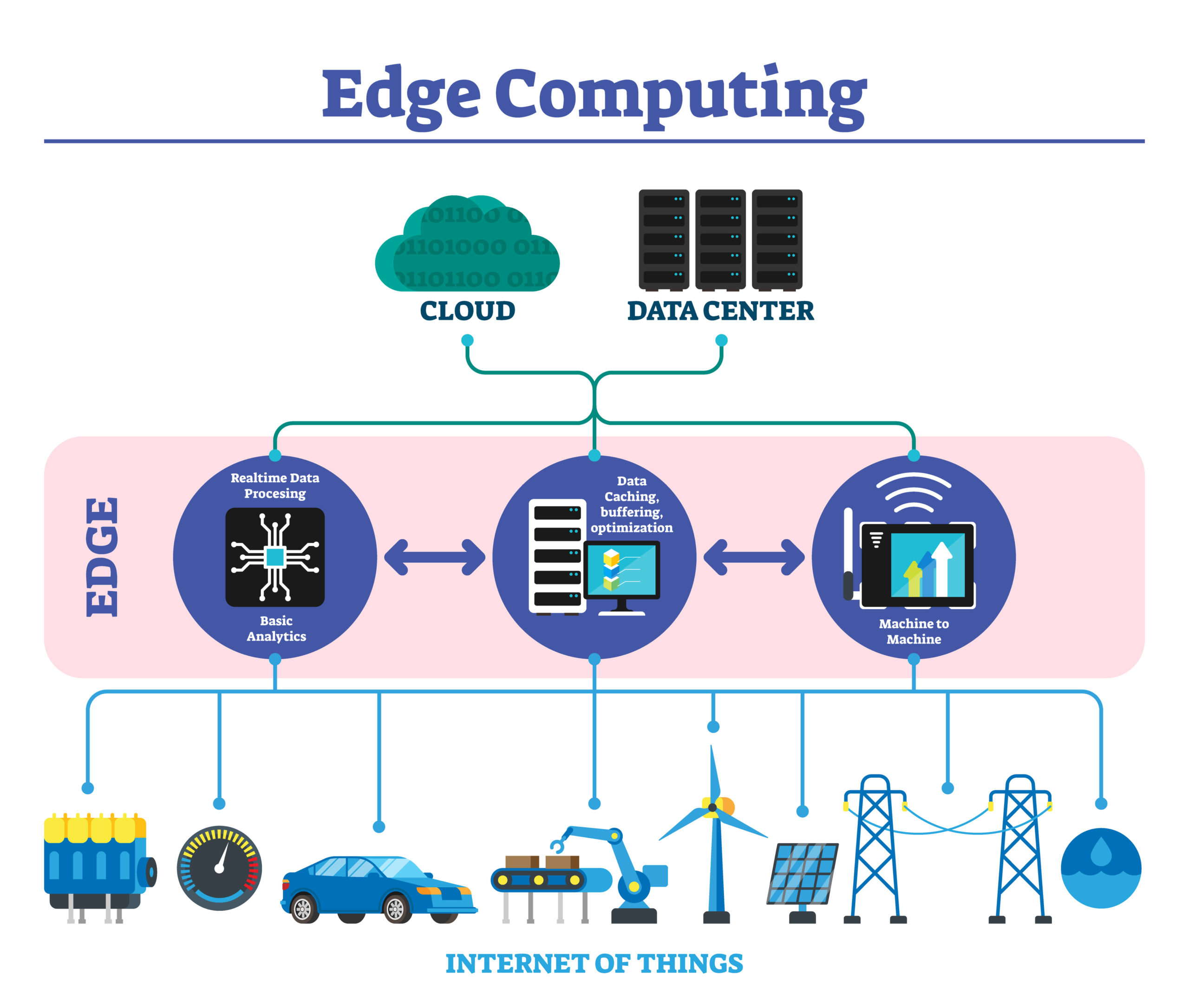

Edge computing pushes intelligence, data processing, analytics, and communication capabilities down to where data originates: at network gateways, directly at endpoints, or wherever data is gathered and delivered to end users. Processing data close to its source reduces latency [1], which makes networks and operations more efficient and improves user experience and service delivery. Companies live or die by their network performance and uptime, and that dependency on performance and reliability will only increase year after year. This is why cloud workloads will continue to shift to the edge.

Globally, the edge computing market is expected to reach USD 43.4 billion by 2027. 5G technology will help drive that growth by creating new traffic demands and traffic patterns. The cloud leaders see the edge as a threat and have responded by investing heavily in the edge ecosystem with telecom providers. Server huggers are individuals or entities that resist moving into the cloud, clinging instead to their terrestrial computer servers. By the same logic, if you are clinging to the cloud today, you may soon be considered tomorrow’s cloud hugger: someone who resists moving to edge computing despite its capabilities and advantages, including the cost benefits.

Millions of Internet of Things (IoT) devices are scattered worldwide, collecting and transmitting petabytes of telemetry and audio/video to servers for analysis. In the consumer market, IoT is usually thought of as the ‘smart home,’ which includes internet-connected appliances such as lighting fixtures, thermostats, home security systems, and cameras. Traditional fields such as embedded systems, wireless sensor networks, control systems, automation, transportation systems, vehicular communication systems, medical devices, and elder care all contribute to the IoT. The bandwidth needed to carry all of this data costs millions, and the long distances involved create latency issues. To cut costs and improve quality, the data analytics servers were moved physically closer to the devices, so that only relevant and essential data is transmitted to the cloud. This is edge computing.
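The idea of sending only relevant and essential data upstream can be illustrated with a minimal sketch. It assumes a hypothetical batch of temperature readings and a hypothetical alert threshold (`TEMP_ALERT_C`); the field names and the function `summarize_at_edge` are invented for illustration and do not come from any particular product.

```python
from statistics import mean

# Hypothetical alert threshold for this sketch (degrees Celsius).
TEMP_ALERT_C = 85.0


def summarize_at_edge(readings, alert_threshold=TEMP_ALERT_C):
    """Reduce a batch of raw readings to the data worth sending upstream.

    Returns a small summary plus any readings above the alert threshold.
    The remaining raw readings stay on the edge node.
    """
    if not readings:
        return None  # nothing to report for an empty batch
    values = [r["temp_c"] for r in readings]
    alerts = [r for r in readings if r["temp_c"] > alert_threshold]
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean(values), 2),
        "alerts": alerts,
    }


# Illustrative batch; a real edge node would collect far more readings.
raw_batch = [
    {"sensor": "s1", "temp_c": 71.2},
    {"sensor": "s2", "temp_c": 70.8},
    {"sensor": "s3", "temp_c": 90.5},
    {"sensor": "s4", "temp_c": 69.9},
]
summary = summarize_at_edge(raw_batch)
print(summary)
```

In this sketch the edge node forwards one summary record and the single out-of-range reading instead of every raw data point, which is where the bandwidth savings would come from.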

Edge computing is a new paradigm for business operations. In practice, it is a stripped-down data center located physically closer to the IoT devices, which reduces cost and latency. Edge computing is a powerful enabler for autonomous cars, augmented reality, smart cities, and more. This model for processing and analyzing data will grow over the coming years. Its potential and lower cost make adoption almost unavoidable.

Key Characteristics of Edge Computing Include:

- Computing power in the network or ‘on-premises’

- Proximity

- Real-time data processing

- Wide geographic distribution

What’s the Catch?

Companies relying on edge computing to save money and/or improve product quality need to be aware of the security risks that accompany the financial gains. This “closer to the edge” deployment loses the physical and network security features that larger data centers provide, and it adds another hole to the porous enterprise perimeter (if the perimeter is not dead already). Data stored at the edge, which was previously transmitted to and stored in large, expensive data centers, is now at greater risk of exposure.

One use case for edge computing is the monitoring of energy platforms. Failures in nuclear, oil, gas, hydroelectric, and other energy-generating platforms pose an elevated risk of major catastrophes. To maintain safety, IoT devices are scattered across remote locations, collecting terabytes of data that are then transmitted to the cloud for analysis and monitoring. This method is expensive, and the latency of long-haul data transfer makes it less safe. Edge computing pushes the analysis and safety monitoring downstream to the remote platforms, creating real-time visibility and response.
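A rough sketch of what local monitoring and response could look like on a remote platform follows. Everything in it is an assumption made for illustration: the class `EdgeSafetyMonitor`, the pressure limit, the three-reading window, and the `SHUTDOWN_VALVE` action are hypothetical and not taken from any real control system.

```python
from collections import deque


class EdgeSafetyMonitor:
    """Evaluate readings on the platform itself, without a cloud round trip."""

    def __init__(self, pressure_limit_kpa, window=3):
        self.pressure_limit_kpa = pressure_limit_kpa
        self.recent = deque(maxlen=window)  # most recent readings only
        self.outbox = []  # events queued for later upload to the cloud

    def ingest(self, pressure_kpa):
        """Record one reading and return the local action to take."""
        self.recent.append(pressure_kpa)
        # Trip only when the whole window exceeds the limit,
        # so a single noisy spike does not trigger a shutdown.
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and all(p > self.pressure_limit_kpa for p in self.recent):
            self.outbox.append(
                {"event": "overpressure", "readings": list(self.recent)}
            )
            return "SHUTDOWN_VALVE"
        return "OK"


monitor = EdgeSafetyMonitor(pressure_limit_kpa=500.0)
actions = [monitor.ingest(p) for p in [480.0, 510.0, 520.0, 530.0, 490.0]]
print(actions)
print(monitor.outbox)
```

The decision is made where the sensor data is produced, and only the resulting event is queued for the cloud, so the response time does not depend on the long-haul link.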

Real-time visibility and response capabilities, not to mention the cost savings, come with the increase in cybersecurity risk discussed above. Events over the last five years have demonstrated the need to secure our means of energy production throughout the world. These platforms are attractive targets, and as edge computing is put into play, essential security must be baked into the process.

To secure edge computing, make sure it’s part of your company’s Information Security program. Equip it with strong endpoint protection, multi-factor authentication, data encryption, and VPN tunneling. Ensure your IoT and edge computing devices are tracked in your asset management program and included in regular assessments like vulnerability scanning, penetration testing, red teaming, and other relevant modern security audits.

Modern technology, businesses, and competition are moving at blinding speeds. Here at Withum, we embrace new technologies that ensure a position of strength well into the future. We can help protect that future by ensuring your business makes well-informed decisions about its current and future infrastructure needs. Technology is critical to remaining relevant and competitive in today’s environment.

[1] Latency in computing refers to the delay in transmission and/or processing, e.g., when one component of a system or an application is waiting for an action to be transmitted and/or executed by another component. Latency should not be confused with bandwidth; they are two very different things. Bandwidth measures the amount of data that can travel over a connection at one time. Latency, on the other hand, measures how long it takes for data to travel from its origin to its destination. No matter how fast a connection may be, data must still physically travel a distance, often over various networks, which takes time, incurs costs, and creates bottlenecks, limitations, and performance and reliability issues.
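The distinction between latency and bandwidth can be made concrete with a small back-of-the-envelope calculation. The sketch below assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds the speed of light) and deliberately ignores routing, queuing, and processing delays, so real-world figures would be higher. The distances and payload size are illustrative.

```python
# Assumed signal speed in optical fiber: roughly two-thirds the speed of light.
FIBER_SPEED_KM_PER_S = 200_000


def transfer_time_s(distance_km, payload_bytes, bandwidth_bps):
    """Estimate one-way delivery time: propagation delay plus transmission time."""
    propagation = distance_km / FIBER_SPEED_KM_PER_S  # delay from distance alone
    transmission = payload_bytes * 8 / bandwidth_bps  # time to put the bits on the link
    return propagation + transmission


payload = 1_000  # a 1 kB telemetry message (illustrative size)
sites = [("edge site, 10 km", 10), ("distant cloud region, 4,000 km", 4_000)]
for label, km in sites:
    for gbps in (1, 10):
        t = transfer_time_s(km, payload, gbps * 1e9)
        print(f"{label:32s} {gbps:>2} Gbps: {t * 1000:.3f} ms")
```

In the 4,000 km case the propagation term alone is 20 ms, so a tenfold increase in bandwidth barely changes the total. Shortening the distance, which is what edge computing does, is what reduces the delay.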
